Faster, smarter, more responsive AI applications: that’s what your users expect. But when large language models (LLMs) are slow to respond, user experience suffers. Every millisecond counts.

With Cerebras’ high-speed inference endpoints, you can reduce latency, speed up model responses, and maintain quality at scale with models like Llama 3.1-70B. By following a few simple steps, you’ll be able to customize and deploy your own LLMs, giving you the control to optimize for both speed and quality.

In this blog, we’ll walk you through how to:

- Set up Llama 3.1-70B in the DataRobot LLM Playground.

- Generate and apply an API key to leverage Cerebras for inference.

- Customize and deploy smarter, faster applications.

By the end, you’ll be ready to deploy LLMs that deliver speed, precision, and real-time responsiveness.

Prototype, customize, and test LLMs in one place

Prototyping and testing generative AI models often requires a patchwork of disconnected tools. But with a unified, integrated environment for LLMs, retrieval techniques, and evaluation metrics, you can move from idea to working prototype faster and with fewer roadblocks.

This streamlined process means you can focus on building effective, high-impact AI applications without the hassle of piecing together tools from different platforms.

Let’s walk through a use case to see how you can leverage these capabilities to develop smarter, faster AI applications.

Use case: Speeding up LLM inference without sacrificing quality

Low latency is essential for building fast, responsive AI applications. But accelerated responses don’t have to come at the cost of quality.

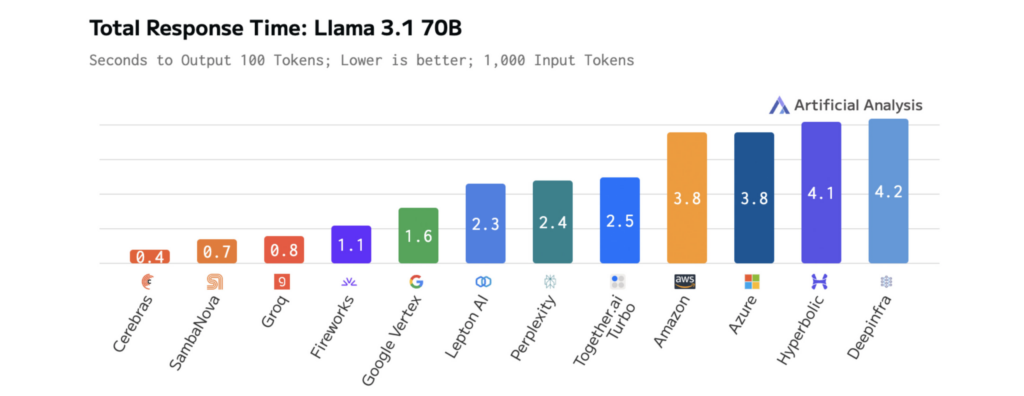

The speed of Cerebras Inference outperforms other platforms, enabling developers to build applications that feel smooth, responsive, and intelligent.

When combined with an intuitive development experience, you can:

- Reduce LLM latency for faster user interactions.

- Experiment more efficiently with new models and workflows.

- Deploy applications that respond instantly to user actions.

The diagrams below show Cerebras’ performance on Llama 3.1-70B, illustrating faster response times and lower latency than other platforms. This enables rapid iteration during development and real-time performance in production.

How model size impacts LLM speed and performance

As LLMs grow larger and more complex, their outputs become more relevant and comprehensive, but this comes at a cost: increased latency. Cerebras tackles this challenge with optimized computations, streamlined data transfer, and intelligent decoding designed for speed.

These speed improvements are already transforming AI applications in industries like pharmaceuticals and voice AI. For example:

- GlaxoSmithKline (GSK) uses Cerebras Inference to accelerate drug discovery, driving higher productivity.

- LiveKit has boosted the performance of ChatGPT’s voice mode pipeline, achieving faster response times than traditional inference solutions.

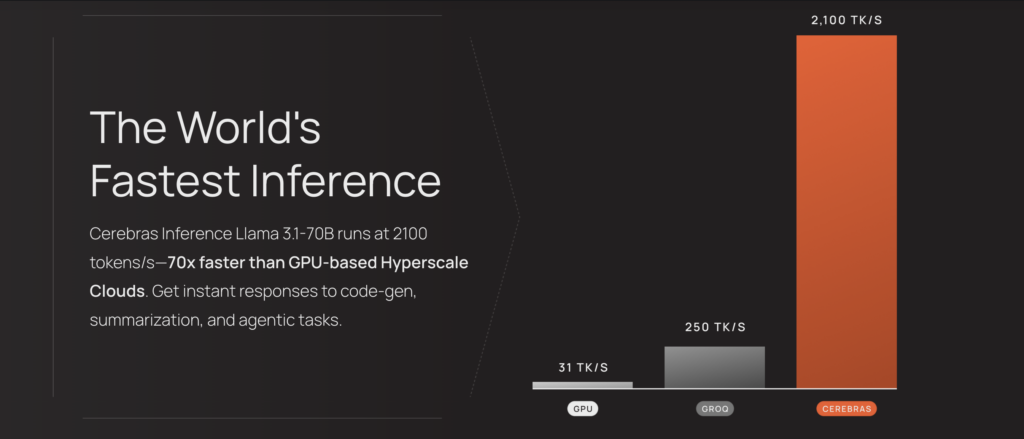

The results are measurable. On Llama 3.1-70B, Cerebras delivers 70x faster inference than vanilla GPUs, enabling smoother, real-time interactions and faster experimentation cycles.
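To check a speedup figure like this against your own workload, a small timing harness is often enough. The following is a minimal sketch, not a benchmark methodology from Cerebras or DataRobot: `measure_latency` and `speedup` are hypothetical helper names, and `call` stands in for whatever function sends a prompt to the model you are testing.

```python
import statistics
import time


def measure_latency(call, runs=5):
    """Time `call()` `runs` times and summarize wall-clock latency in seconds."""
    if runs < 1:
        raise ValueError("runs must be at least 1")
    samples = []
    for _ in range(runs):
        start = time.perf_counter()
        call()  # e.g. a function that sends one prompt and waits for the reply
        samples.append(time.perf_counter() - start)
    return {
        "runs": runs,
        "median_s": statistics.median(samples),
        "max_s": max(samples),
    }


def speedup(baseline_s, candidate_s):
    """How many times faster the candidate is than the baseline."""
    return baseline_s / candidate_s
```

Running `measure_latency` once per backend with the same prompt, then passing the two medians to `speedup`, gives a rough like-for-like comparison. The median is used here because a single slow request can distort an average.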

This performance is powered by Cerebras’ third-generation Wafer-Scale Engine (WSE-3), a custom processor designed to optimize the tensor-based, sparse linear algebra operations that drive LLM inference.

By prioritizing performance, efficiency, and flexibility, the WSE-3 ensures faster, more consistent results when running models.

Cerebras Inference’s speed reduces the latency of AI applications powered by their models, enabling deeper reasoning and more responsive user experiences. Accessing these optimized models is simple: they’re hosted on Cerebras and accessible via a single endpoint, so you can start leveraging them with minimal setup.

Step-by-step: How to customize and deploy Llama 3.1-70B for low-latency AI

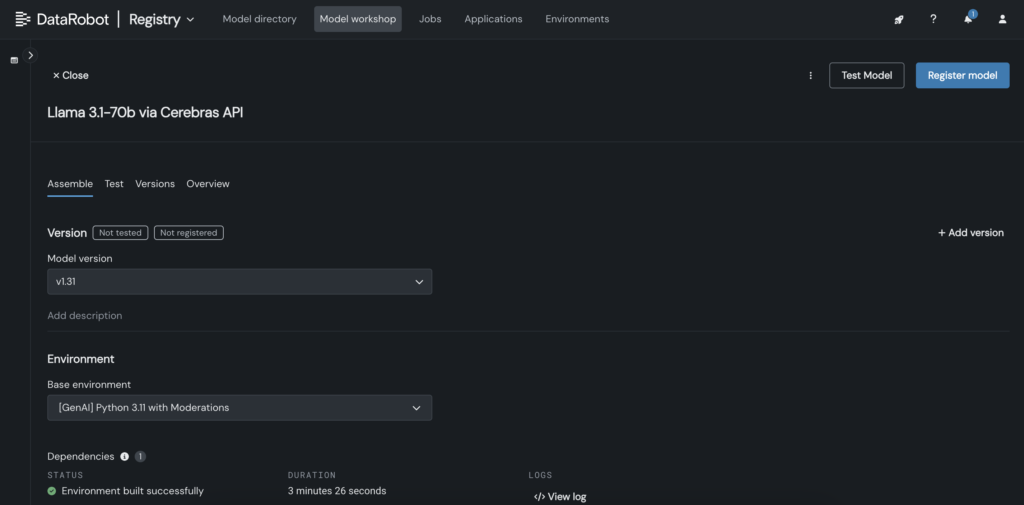

Integrating LLMs like Llama 3.1-70B from Cerebras into DataRobot allows you to customize, test, and deploy AI models in just a few steps. This process supports faster development, interactive testing, and greater control over LLM customization.

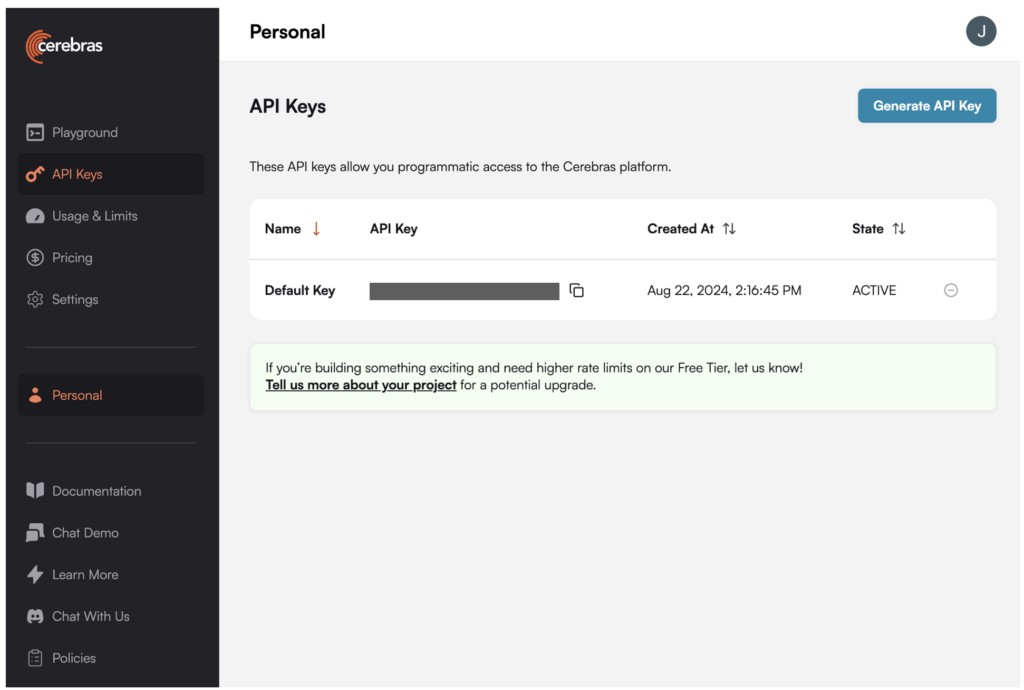

1. Generate an API key for Llama 3.1-70B in the Cerebras platform.

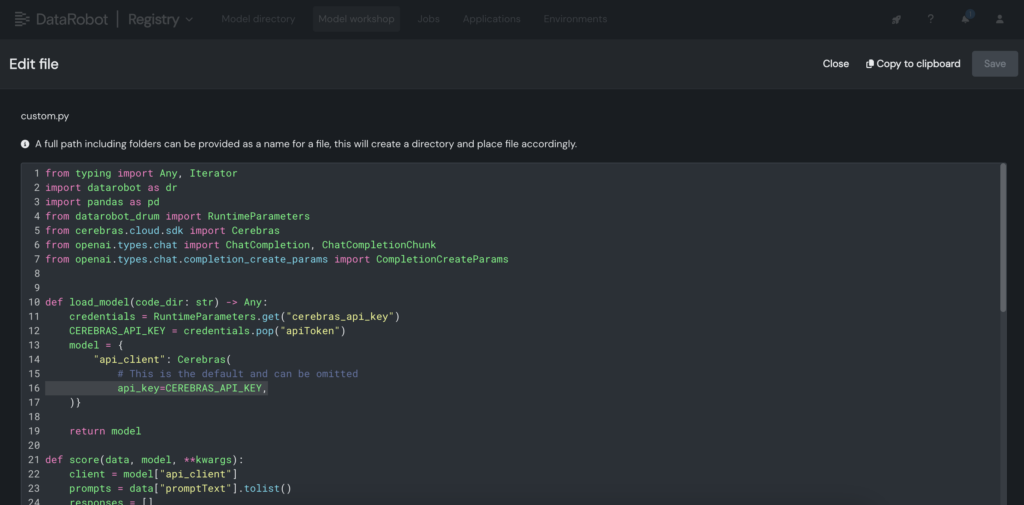

2. In DataRobot, create a custom model in the Model Workshop that calls out to the Cerebras endpoint where Llama 3.1-70B is hosted.

3. Within the custom model, place the Cerebras API key in the custom.py file.

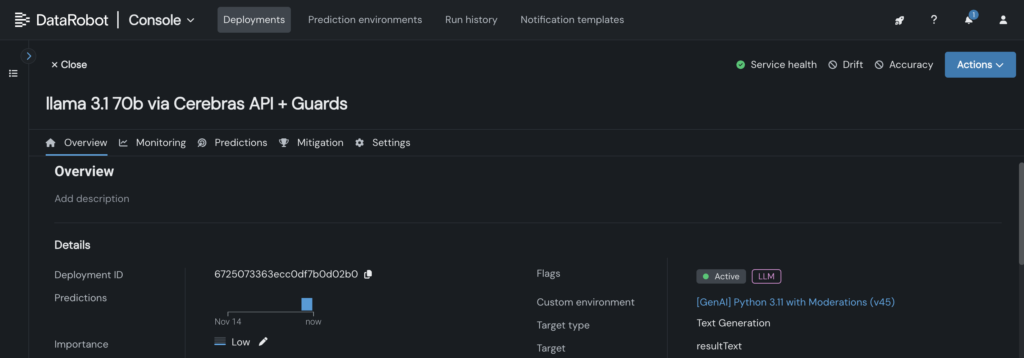

4. Deploy the custom model to an endpoint in the DataRobot Console, enabling LLM blueprints to leverage it for inference.

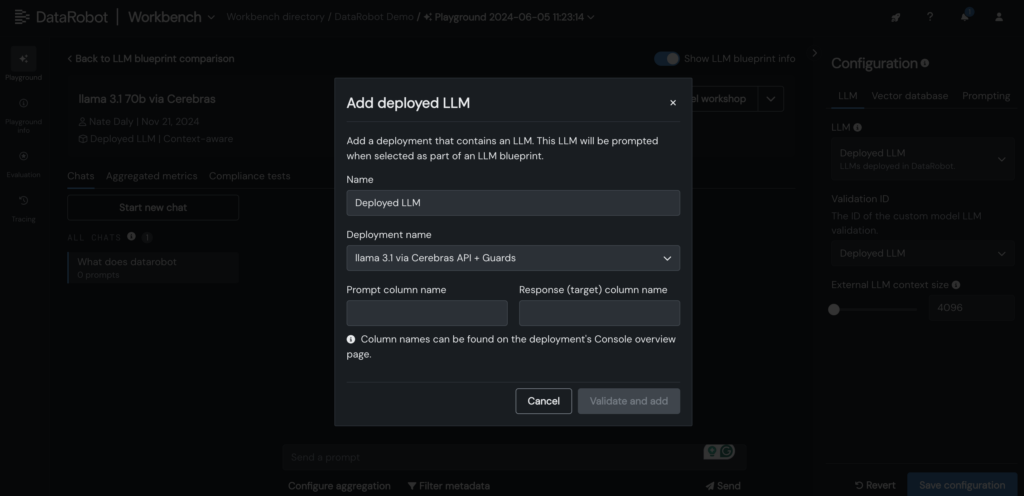

5. Add your deployed Cerebras LLM to the LLM blueprint in the DataRobot LLM Playground to start chatting with Llama 3.1-70B.

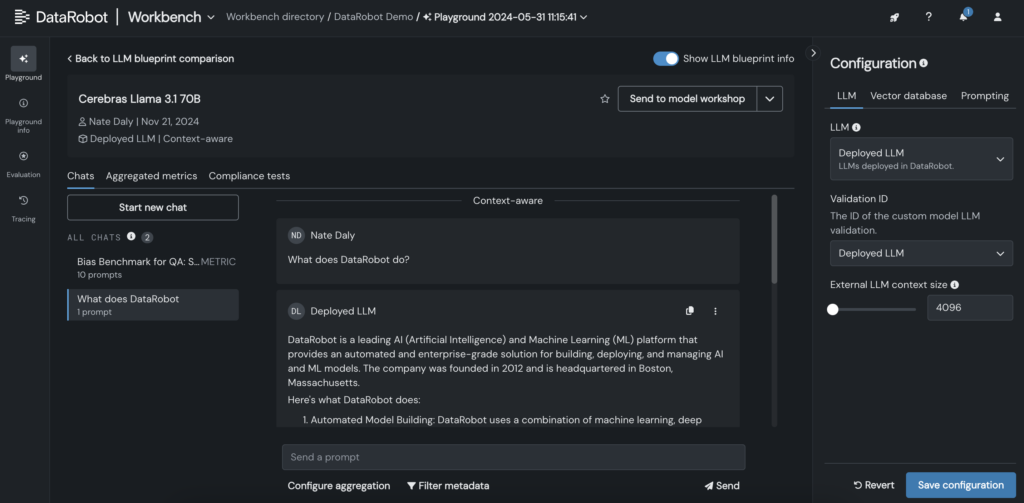

6. Once the LLM is added to the blueprint, test responses by adjusting prompting and retrieval parameters, and compare outputs with other LLMs directly in the DataRobot GUI.
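The core of steps 2 and 3, a custom model that calls the hosted endpoint with your API key, can be sketched roughly as follows. This is an illustration under stated assumptions, not DataRobot’s or Cerebras’ reference code: the endpoint URL, the model identifier, the `CEREBRAS_API_KEY` variable name, and every function name below are assumptions, and the request shape assumes an OpenAI-style chat completions API. The sketch also reads the key from an environment variable rather than writing it directly into custom.py, which is a swap from the step as described.

```python
import json
import os
import urllib.request

# Assumed values; confirm both against the Cerebras documentation.
CEREBRAS_URL = "https://api.cerebras.ai/v1/chat/completions"
MODEL_ID = "llama3.1-70b"


def load_api_key(env_var="CEREBRAS_API_KEY"):
    """Read the API key from the environment (hypothetical variable name)."""
    key = os.environ.get(env_var)
    if not key:
        raise RuntimeError(f"Set {env_var} before calling the endpoint")
    return key


def build_request(prompt, api_key, max_tokens=256):
    """Build an OpenAI-style chat completion request for one prompt."""
    body = {
        "model": MODEL_ID,
        "messages": [{"role": "user", "content": prompt}],
        "max_tokens": max_tokens,
    }
    return urllib.request.Request(
        CEREBRAS_URL,
        data=json.dumps(body).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {api_key}",
            "Content-Type": "application/json",
        },
        method="POST",
    )


def _send(request):
    """Send the request over HTTP and decode the JSON response."""
    with urllib.request.urlopen(request, timeout=30) as resp:
        return json.loads(resp.read().decode("utf-8"))


def complete(prompt, api_key, send=_send):
    """Return the generated text for `prompt`; `send` is swappable for tests."""
    response = send(build_request(prompt, api_key))
    return response["choices"][0]["message"]["content"]
```

In a real custom model, a function like `complete` would be called from the scoring hook that DataRobot invokes in custom.py; the hook names and expected input and output columns should be taken from the DataRobot custom model documentation. Passing `send` as a parameter keeps the network call replaceable, so the request-building logic can be checked without an API key.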

Expand the limits of LLM inference for your AI applications

Deploying LLMs like Llama 3.1-70B with low latency and real-time responsiveness is no small task. But with the right tools and workflows, you can achieve both.

By integrating LLMs into DataRobot’s LLM Playground and leveraging Cerebras’ optimized inference, you can simplify customization, speed up testing, and reduce complexity, all while maintaining the performance your users expect.

As LLMs grow larger and more powerful, having a streamlined process for testing, customization, and integration will be essential for teams looking to stay ahead.

Explore it yourself. Access Cerebras Inference, generate your API key, and start building AI applications in DataRobot.

About the authors

Kumar Venkateswar is VP of Product, Platform and Ecosystem at DataRobot. He leads product management for DataRobot’s foundational services and ecosystem partnerships, bridging the gaps between efficient infrastructure and integrations that maximize AI outcomes. Prior to DataRobot, Kumar worked at Amazon and Microsoft, including leading product management teams for Amazon SageMaker and Amazon Q Business.

Nathaniel Daly is a Senior Product Manager at DataRobot focusing on AutoML and time series products. He’s focused on bringing advances in data science to users so that they can leverage this value to solve real-world business problems. He holds a degree in Mathematics from the University of California, Berkeley.